How to use FreeU to boost image quality

FreeU is a simple and elegant method that enhances render quality without additional training or finetuning by re-weighting the contributions of the skip connections and the backbone feature maps in the U-Net architecture. FreeU exposes two skip scaling factors (s1 and s2) and two backbone scaling factors (b1 and b2).

Skip factors

a) Aim to minimize over-smoothing of textures

b) Help preserve details in the rendered image

Backbone factors

a) Amplify the feature map of the entire render

b) Can lead to over-smoothing of textures

c) Generate more vivid details and improve image quality

As the FreeU paper argues, a balance between the skip scaling factors and the backbone scaling factors is therefore essential for a good render. For more details about FreeU, see the link here: Link
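To make the two kinds of scaling more concrete, here is a minimal NumPy sketch of the idea. It assumes a single feature map of shape (channels, height, width), multiplies the first half of the backbone channels by b, and scales only the low-frequency band of the skip features by s. The function names, the `radius` parameter, and the array shapes are illustrative assumptions, not the code our platform runs.

```python
import numpy as np


def scale_backbone(features: np.ndarray, b: float) -> np.ndarray:
    """Multiply the first half of the channels by b (features: C x H x W)."""
    out = features.astype(float)  # astype returns a copy, input is untouched
    half = out.shape[0] // 2
    out[:half] *= b
    return out


def scale_skip(features: np.ndarray, s: float, radius: int = 1) -> np.ndarray:
    """Scale the low-frequency band of every channel by s (features: C x H x W)."""
    _, h, w = features.shape
    # Move to the frequency domain and shift the zero frequency to the centre.
    freq = np.fft.fftshift(np.fft.fft2(features, axes=(-2, -1)), axes=(-2, -1))
    # Mask is 1 everywhere except a small window around the centre, which is s.
    mask = np.ones((h, w))
    ch, cw = h // 2, w // 2
    mask[ch - radius:ch + radius, cw - radius:cw + radius] = s
    freq = freq * mask
    # Back to the spatial domain; keep the real part only.
    out = np.fft.ifft2(np.fft.ifftshift(freq, axes=(-2, -1)), axes=(-2, -1))
    return out.real


# Hypothetical toy feature maps, just to exercise the two functions.
rng = np.random.default_rng(0)
backbone = rng.normal(size=(4, 8, 8))
skip = rng.normal(size=(4, 8, 8))

boosted = scale_backbone(backbone, b=1.2)
damped = scale_skip(skip, s=0.9)
```

With b = 1 and s = 1 both functions return their input unchanged, which is why a value of 1 can be read as "FreeU has no effect on this factor".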

On our platform, FreeU is enabled with the following command, which takes the four factors in this order:

/freeu: s1, s2, b1, b2

By default, we recommend the following values of s1, s2, b1 and b2:

– SD 1.5: s1 = 1, s2 = 1, b1 = 1.2, b2 = 1.2

– SD XL: s1 = 0.9, s2 = 0.2, b1 = 1.2, b2 = 1.4

Note that both the skip and the backbone factors must be between 0 and 2.
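If you build prompts with a script, it can help to check the factors before sending them. The following is a small sketch of such a check, assuming the `/freeu:` syntax and the 0 to 2 range described above. `FreeUFactors`, `DEFAULTS`, and `parse_freeu` are hypothetical helper names made up for this example; they are not part of our platform.

```python
import re
from typing import NamedTuple


class FreeUFactors(NamedTuple):
    s1: float
    s2: float
    b1: float
    b2: float


# Default values recommended in this guide.
DEFAULTS = {
    "sd15": FreeUFactors(s1=1.0, s2=1.0, b1=1.2, b2=1.2),
    "sdxl": FreeUFactors(s1=0.9, s2=0.2, b1=1.2, b2=1.4),
}

# Four comma-separated non-negative numbers after "/freeu:".
_NUMBER = r"(\d+(?:\.\d+)?)"
_PATTERN = re.compile(r"/freeu:\s*" + r"\s*,\s*".join([_NUMBER] * 4))


def parse_freeu(command: str) -> FreeUFactors:
    """Extract s1, s2, b1, b2 from a command string and check the 0-2 range."""
    match = _PATTERN.search(command)
    if match is None:
        raise ValueError("expected /freeu: followed by four numbers")
    factors = FreeUFactors(*(float(group) for group in match.groups()))
    for name, value in factors._asdict().items():
        if not 0.0 <= value <= 2.0:
            raise ValueError(f"{name}={value} is outside the range 0 to 2")
    return factors


factors = parse_freeu("/freeu: 0.9, 0.2, 1.2, 1.4")
print(factors)
```

A value outside the range, for example `/freeu:1,1,2.5,1`, raises a `ValueError` in this sketch instead of being passed along.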

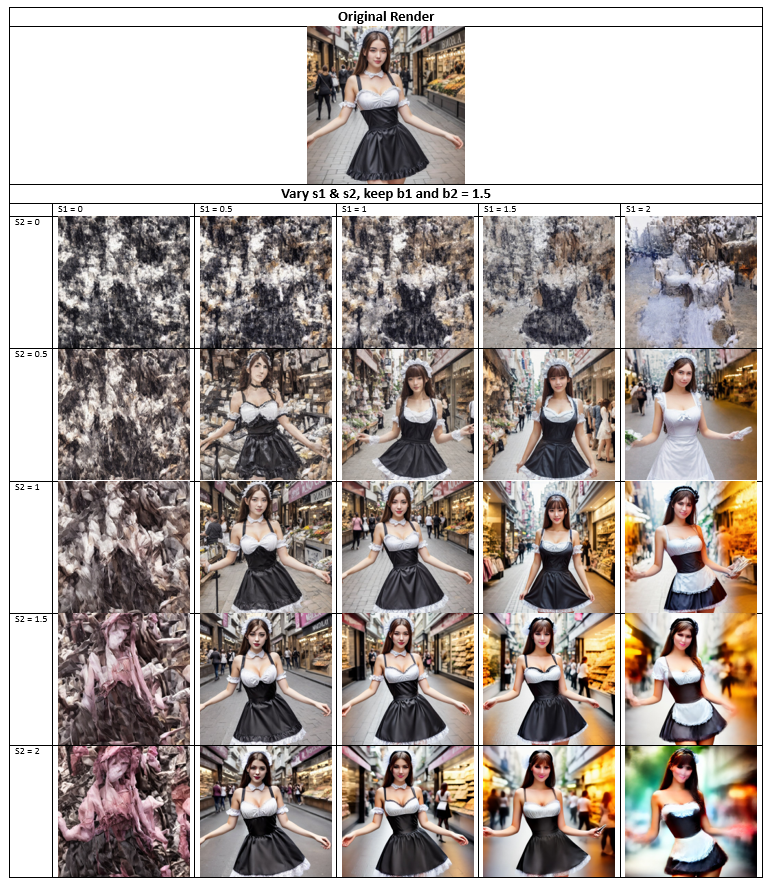

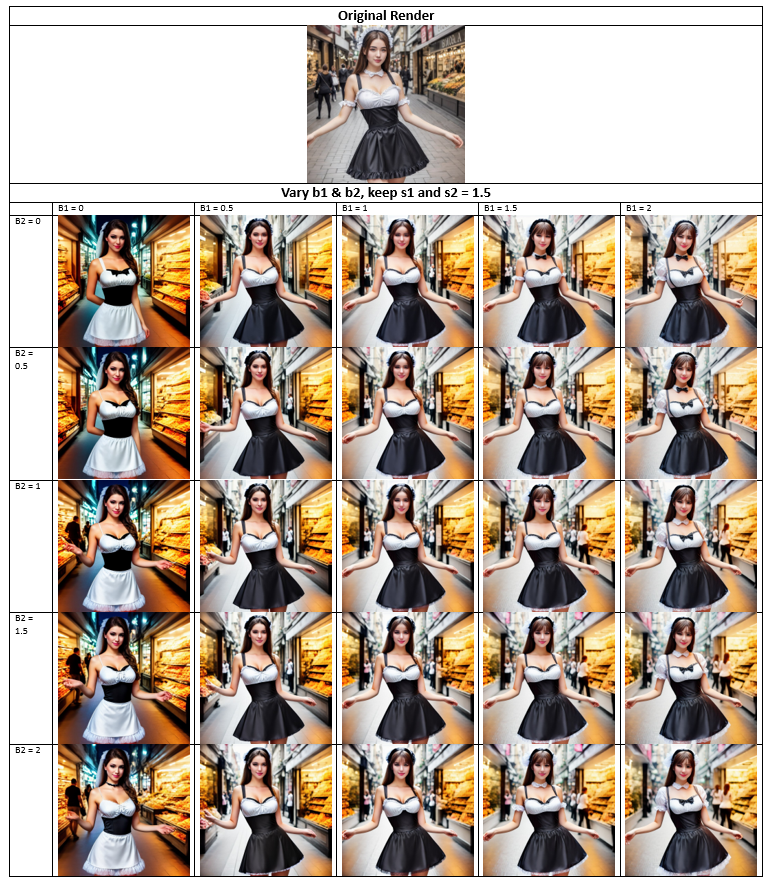

In summary, from our testing we recommend the following (if you're too lazy to read through the X-Y plots):

– Adjust b1 and b2 between 0 and 2, based on your preference, to get the specific feel and level of detail you want.

– Adjust s1 and s2 within the range 0.8 – 1.5 (up to 1.6) to get the right colour contrast and saturation.

– Note that the best values will vary depending on the model and any LoRAs/embeddings used.

——————————————————————————————————————————–

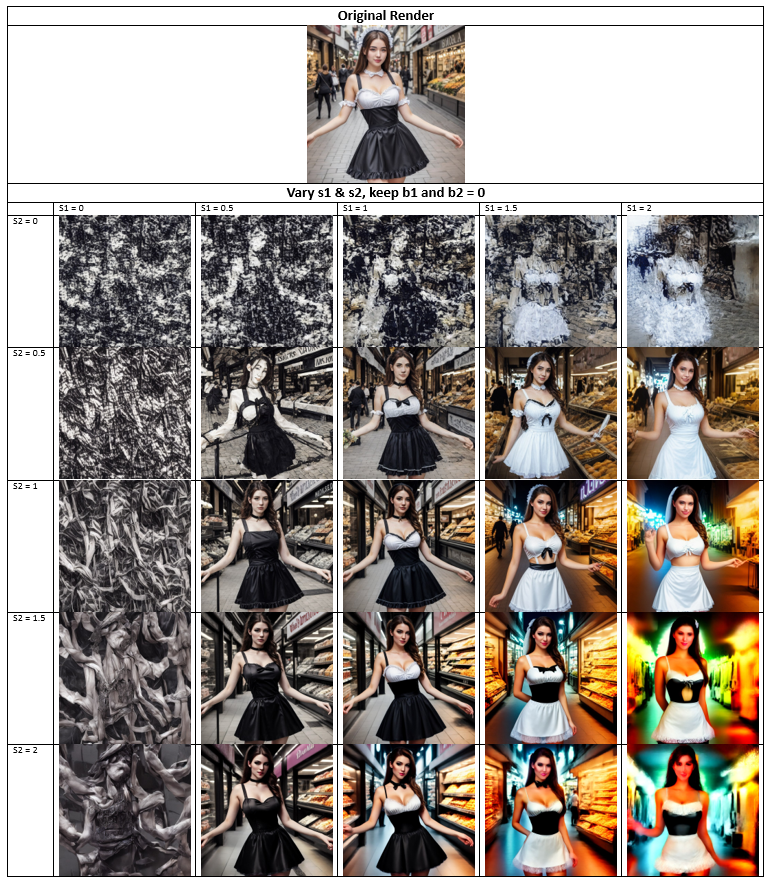

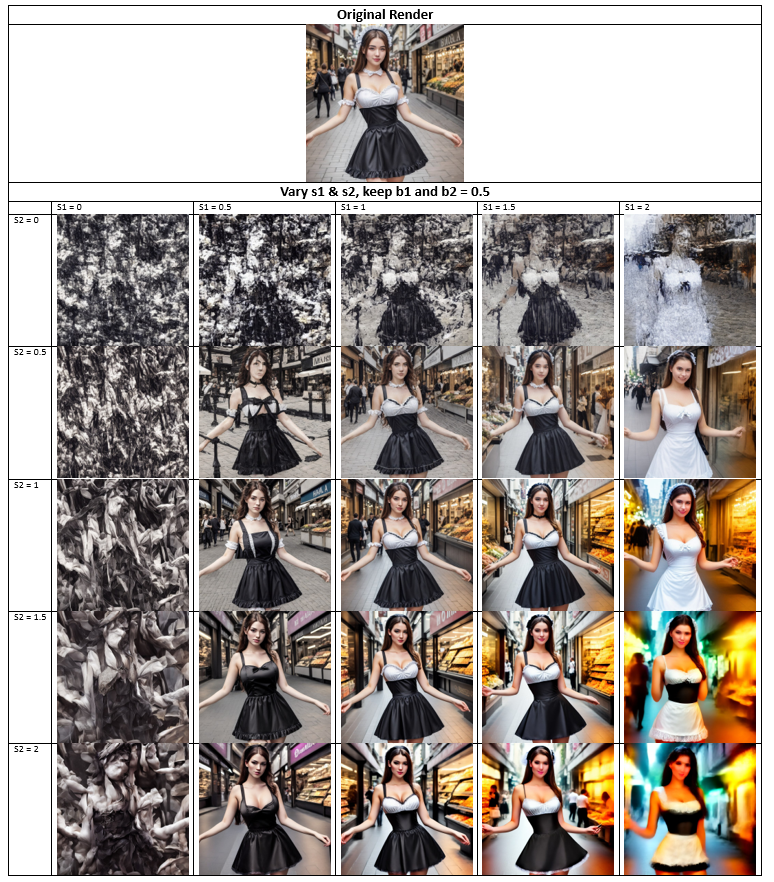

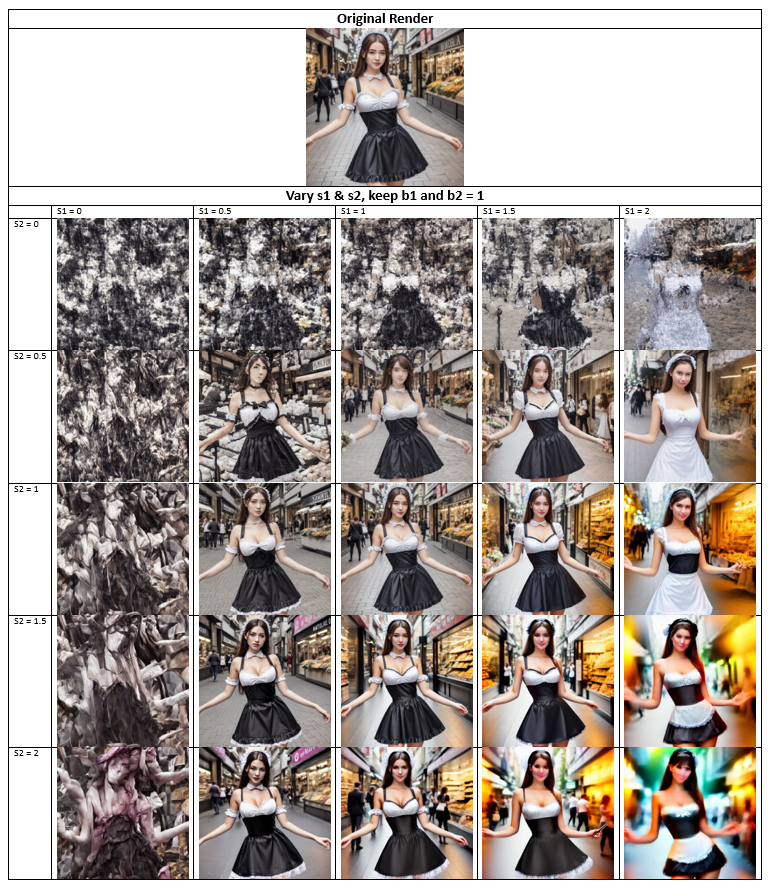

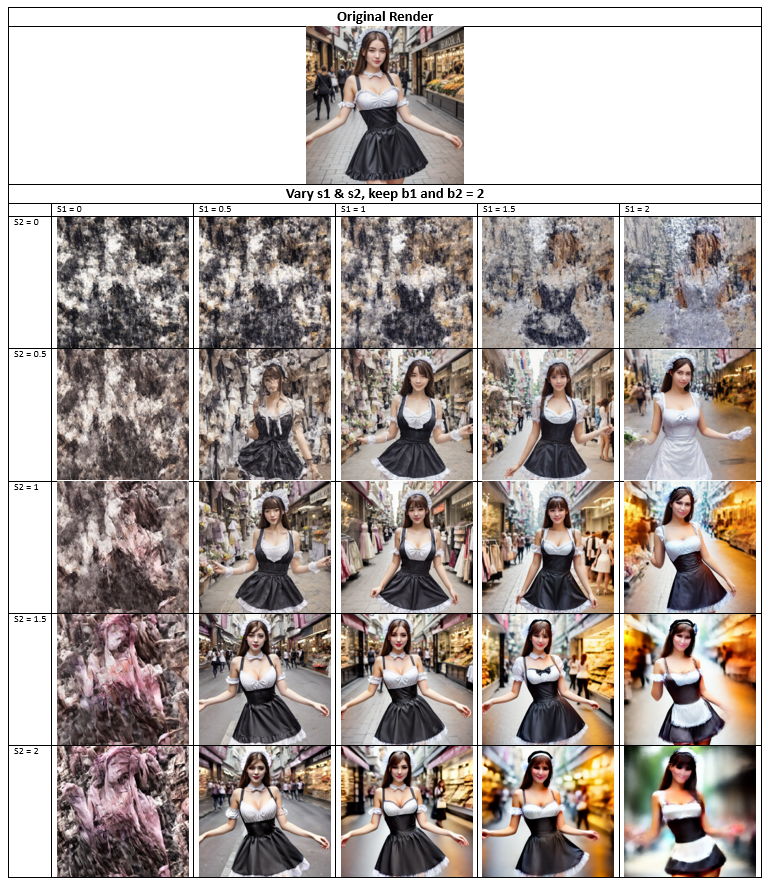

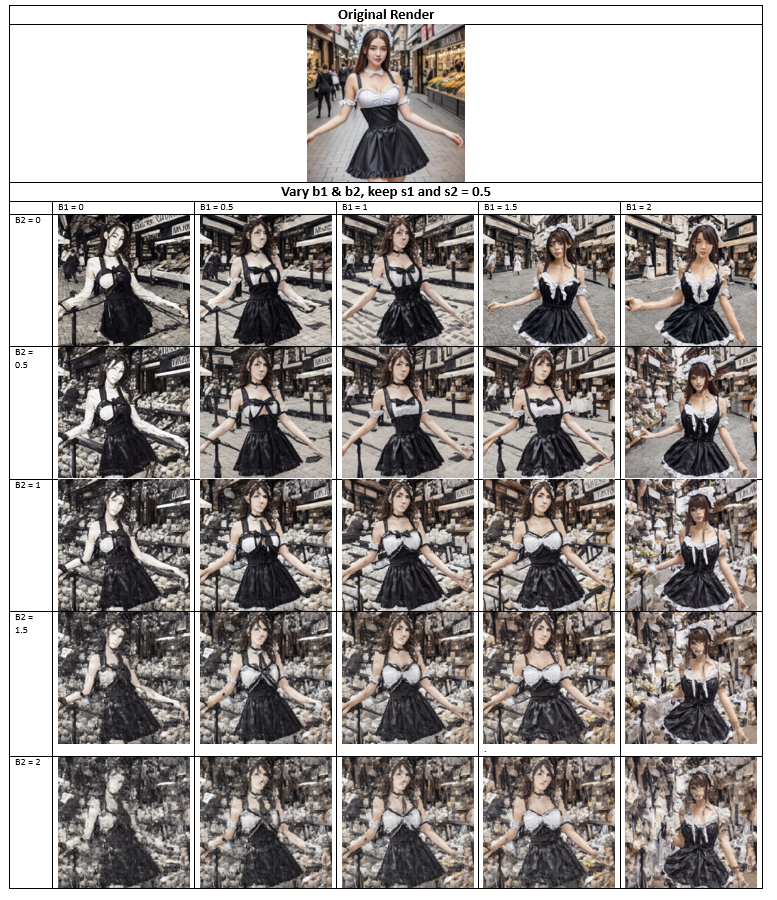

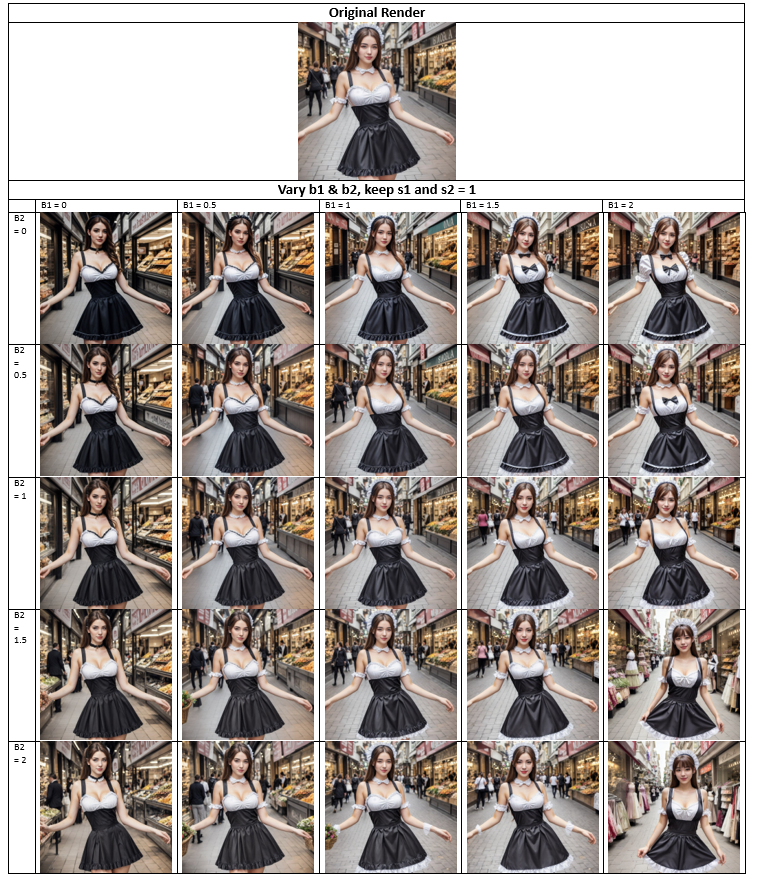

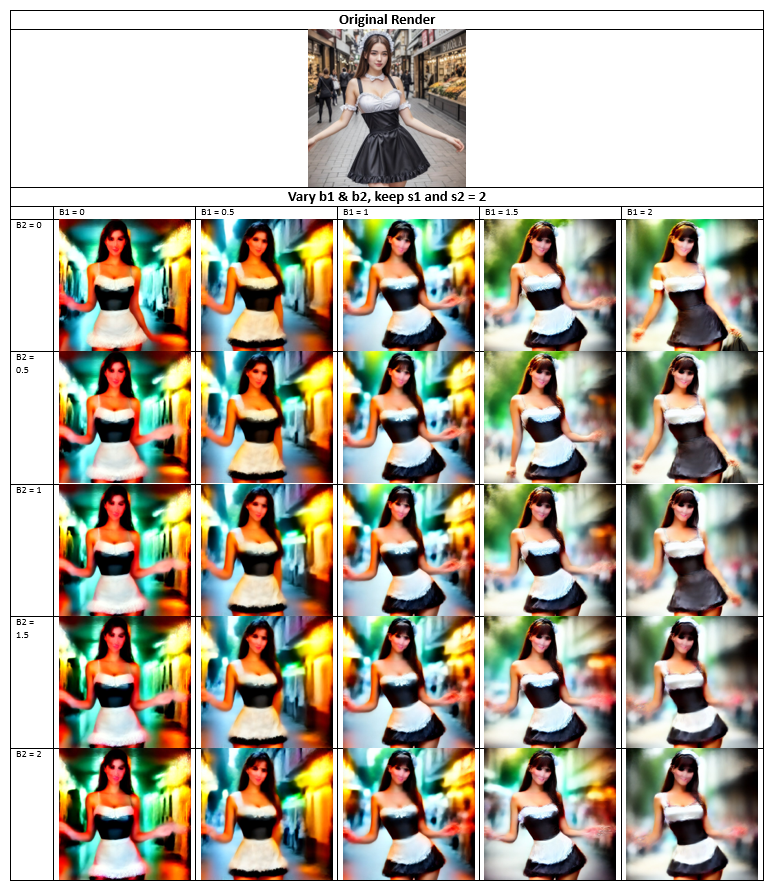

In this test, we showcase the power of FreeU using the following prompt:

/render /size:768x768 /seed:1 /guidance:7 /sampler:dpm2m /images:2 1girl, half body shot, maid outfit, posing on shopping street , /freeu:s1,s2,b1,b2

We will change one set of scaling factors at a time, either the skip or the backbone factors, and keep the other set constant to understand what each one does.
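A sweep like this is easy to script. The sketch below builds one command per grid point of an X-Y plot, using the prompt shown above. The `sweep_commands` helper, the grid values, and the choice of 1.0 for the set held constant are assumptions made for illustration; they are not the exact values used for the plots in this guide.

```python
from itertools import product

# Base prompt from this guide, without the /freeu parameter.
BASE = (
    "/render /size:768x768 /seed:1 /guidance:7 /sampler:dpm2m /images:2 "
    "1girl, half body shot, maid outfit, posing on shopping street"
)


def sweep_commands(vary, xs, ys, fixed=(1.0, 1.0)):
    """Return one command per (x, y) pair, varying one set of factors.

    vary="backbone" sweeps b1 over xs and b2 over ys, holding s1, s2 at `fixed`.
    vary="skip" sweeps s1 over xs and s2 over ys, holding b1, b2 at `fixed`.
    """
    commands = []
    for x, y in product(xs, ys):
        if vary == "backbone":
            s1, s2, b1, b2 = fixed[0], fixed[1], x, y
        elif vary == "skip":
            s1, s2, b1, b2 = x, y, fixed[0], fixed[1]
        else:
            raise ValueError("vary must be 'backbone' or 'skip'")
        commands.append(f"{BASE}, /freeu:{s1:g},{s2:g},{b1:g},{b2:g}")
    return commands


# Hypothetical 3 x 3 grid over the backbone factors.
for command in sweep_commands("backbone", [0.5, 1.0, 1.5], [0.5, 1.0, 1.5]):
    print(command)
```

Keeping the seed fixed, as the prompt does with `/seed:1`, means that differences between the renders come from the FreeU factors rather than from random noise.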

If you’re ready, let’s go!

Now, we are going to change the backbone factors while keeping the skip factors constant.

Even though this test uses a realistic checkpoint, the trend is similar when a semi-realistic or anime-based checkpoint is used. In summary, we observe the following:

a) Changing the skip factors (s1 & s2) affects the colours and saturation of the render.

b) Changing the backbone factors (b1 & b2) changes the feature map of the render, and with it the overall feel and level of detail.